by Nathan Hales, guest blogger

I’ve invited Nathan, a Solution Architect and member of our BI&A team at 3Cloud, to share this post based on his recent experience and expertise in this area.

A REAL-WORLD ENTERPRISE-SCALE EXAMPLE

Introduction

In a recent blog post, Paul discussed that DevOps, and specifically CI/CD, principles are essential for modern software development, and Power BI is no exception. Power BI CI/CD for enterprise class projects can be achieved using Azure DevOps, with steps including source control, automated testing, and build and release pipelines.

This post shows a real-world example of a full, enterprise-scale Power BI DevOps implementation. Not sure if your situation merits this setup?

Please consider with me the following questions:

- Do you work collaboratively with other team members to manage large Power BI datasets that each support many reports?

- Do you wish that you had the ability to easily rollback changes to your Power BI models?

- Does your organization require strict audit traceability and separation of duties during model deployment?

- Do you utilize incremental refresh and require targeted deployments of model changes?

If the answer to any of the above questions is yes, then it is likely you fall under the enterprise-scale project category and should consider implementing a setup similar to the example below. If you fall under a different category, the principles below will provide valuable insight even if your application of those principles is adapted to your own environment.

Example Overview

Let us imagine that we are a data analyst on the data team of the New York City Taxi and Limousine Commission (TLC). The team manages a taxi model that provides critical insight into ride durations, peak ride hours, and taxi performance. We have been tasked with modifying the Power BI dataset to include a taxi lookup table that will provide rich descriptive information for report authors.

The detailed steps below explain how to make changes to an existing Power BI model and deploy them to Power BI Service. It involves creating a feature branch, making changes to the model, saving them as a .pbit and JSON folder, committing and publishing the changes to a cloud repository, creating a pull request to merge the feature branch into the main branch, and using a Build & Release pipeline to deploy the changed models to the dev, test, and prod environments in Power BI Service.

Architectural Overview

Process Overview

Prerequisites

Before diving into the example, we need to establish requirements that make this solution work. We will not be going in depth on each of these items, but you should be aware that they are working in the background.

- A Git repository for shared storage of Power BI models along with code that is needed to make the whole integration and deployment process work. In this example we use Azure DevOps Repos. If a branching strategy is defined for your organization, you will want to adapt this example to match that process.

- A pipeline service that can automatically build and test your Power BI models, in addition to deploying them to Power BI Service. Azure DevOps Pipelines is used in this example.

- A service principal (SPN) that has been created and configured with access to Power BI APIs as well as access to the workspaces where the deployments will take place. The SPN is the authority by which the deployments happen.

- Power BI workspaces that are part of Premium Per Capacity or Premium Per User and that have the XMLA endpoint enabled with Read Write.

- Tabular Editor 2 is an open source tool that performs the work of breaking apart the PBIX files into readable JSON, as well as performing the build and release activities through the XMLA endpoint.

- Code to perform build and release activities – in this example we use PowerShell in combination with YAML pipeline definition files.

- Visual Studio Code, connected to the Git repository, will be used to sync changes made in our local machine with Git. Please note that Git needs to be installed on your local machine for this to work.

Model Changes in Power BI to Git Repo

The first step in the process is to create a feature branch in Visual Studio Code to house our planned model changes:

Next, we will open up the .pbit file associated with the model that we would like to change.

Once you have updated any necessary parameters and connected to the data sources, it is time to make updates to the model. In this case, I will be adding a new table: Taxi Lookup. After making your changes, you need to save them back to the Git repository. The save process is done twice – once as a .pbit and once as a JSON folder.

Save as a .pbit:

Save as a JSON folder using Tabular Editor (External Tools):

The next step is to commit the changes that we made to our local branch, and then publish the updated branch to the cloud repository. This is done in Visual Studio Code through commit and publish/sync actions. First, enter a commit message (notice the updated and new files based on our changes) and then hit commit:

Because this is our first commit of the feature branch, the option is to publish the branch. Once the branch is already in the cloud repository, the option will show to sync the branch.

Voila! Now your feature branch is in Azure DevOps, and ready to merge back into the main branch to publish your changes to Power BI Service.

Merge feature branch into main branch

All of this work does not help us if we do not get the updates into the one place that has the ability to publish changes to Power BI Service: the main branch. In order to merge changes into the main branch you will need to create a Pull Request.

Ensure that the pull request is your feature branch into the main branch (or whatever branching strategy your organization practices). Notice that under Files we can see exactly what updates to the main branch will be introduced by merging our feature branch.

Because the .pbit file is an application file, Azure DevOps cannot read it to determine differences between the feature branch version and the main branch version. This is one of the main reasons why the JSON folder is so helpful – JSON is easily parsed, and differences will show clearly.

After adding comments, reviewers, or linking work items from Azure DevOps Boards, create the pull request.

In this example, the main branch has a policy that requires a successful run of the Build Validation pipeline. This pipeline, which is automatically kicked off with a new pull request, runs several important tests to ensure that the updates are valid.

By selecting the Build Validation pipeline from the Pull Request screen, we can see the results of the run for each environment:

Once the Build Validation pipeline runs successfully and required approvals have been obtained, the next step is to complete the pull request.

Once the merge is completed, it automatically triggers the Build & Release pipeline. The Build Stage creates the artifacts that will be deployed to each environment. These artifacts include .bim files generated from the JSON folder of each changed model, deployment scripts, credentials for each data source, and a list of changed models to deploy to each environment. In our example, the only changed model is PBI Networking Demo.

Once the Build Stage succeeds, the Dev Release Stage automatically takes those artifacts and deploys the changed models to the dev workspace in Power BI Service.

All of the release stages perform the same set of activities for each environment. These actions likely include deploying each changed model, setting credentials, and running a targeted refresh of specific tables that need to be updated.

Once the Dev Release Stage completes, the Test and Prod stages are each triggered in order by approval from a reviewer. Please note that in order for a model update to be deployed to the Prod environment in Power BI Service, it passed the tests in the Build Validation pipeline as well as successfully deployed to both the Dev and Test environments.

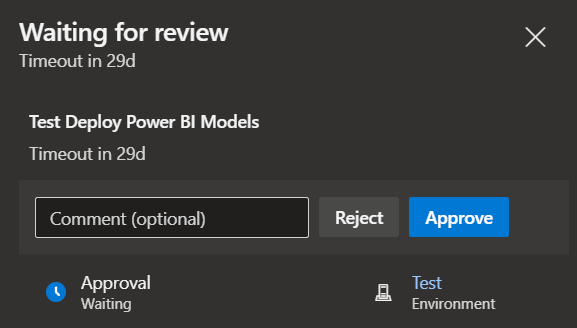

In this example, the approval to deploy to both the Test and Prod stages looks like the following:

After the Build and Release pipeline has run successfully, we can verify the new table, Taxi Lookup, has been added to the production Power BI model:

Solution Benefits

An enterprise-scale Power BI DevOps implementation does require utilizing tools beyond Power BI Desktop, as well as appropriate expertise to set it up correctly. However, the benefits are substantial:

- Frequent, reviewed, automated, and incremental deployments

- Parallel team development

- Full audit trail

- Model rollbacks made easy

If this setup does not make sense for you, then let the principles above guide your processes for Power BI model management, adapted to your own environment.

Nathan Hales (LinkedIn) is a Data Architect at 3Cloud Solutions specializing in Microsoft technologies such as Azure, Power BI, Power Platform, and Analysis Services.

A very useful article and source for learning such high-quality information! I appreciate you sharing this useful information.

Great Post,

We are currently trying to set up our own CI/CD solution. Would you have a demo on how you set up DevOps artefacts (branchs/ pipelines/yaml, etc??).

I am particularly interested to moving from Dev to test and then to Prod. We have a solution already working (maybe not exactly how we want it) for the Dev environemnt deployments, but moving to Test and Prod in a automated way would be interesting.

Very nice! A really nice overview with a real life application.

This application uses a model. I’m assuming that Tabular Editor can be left out? Apart from readability, what other advantage , or even disadvantage would apply here?

Also , I could adapt this example to power bi reports themselves? I see no issues there.

Thanks

Thanks for this nice summary. Will the tabular model definition language then remove the step to have a pbit-file for those that have Power BI Premium available?